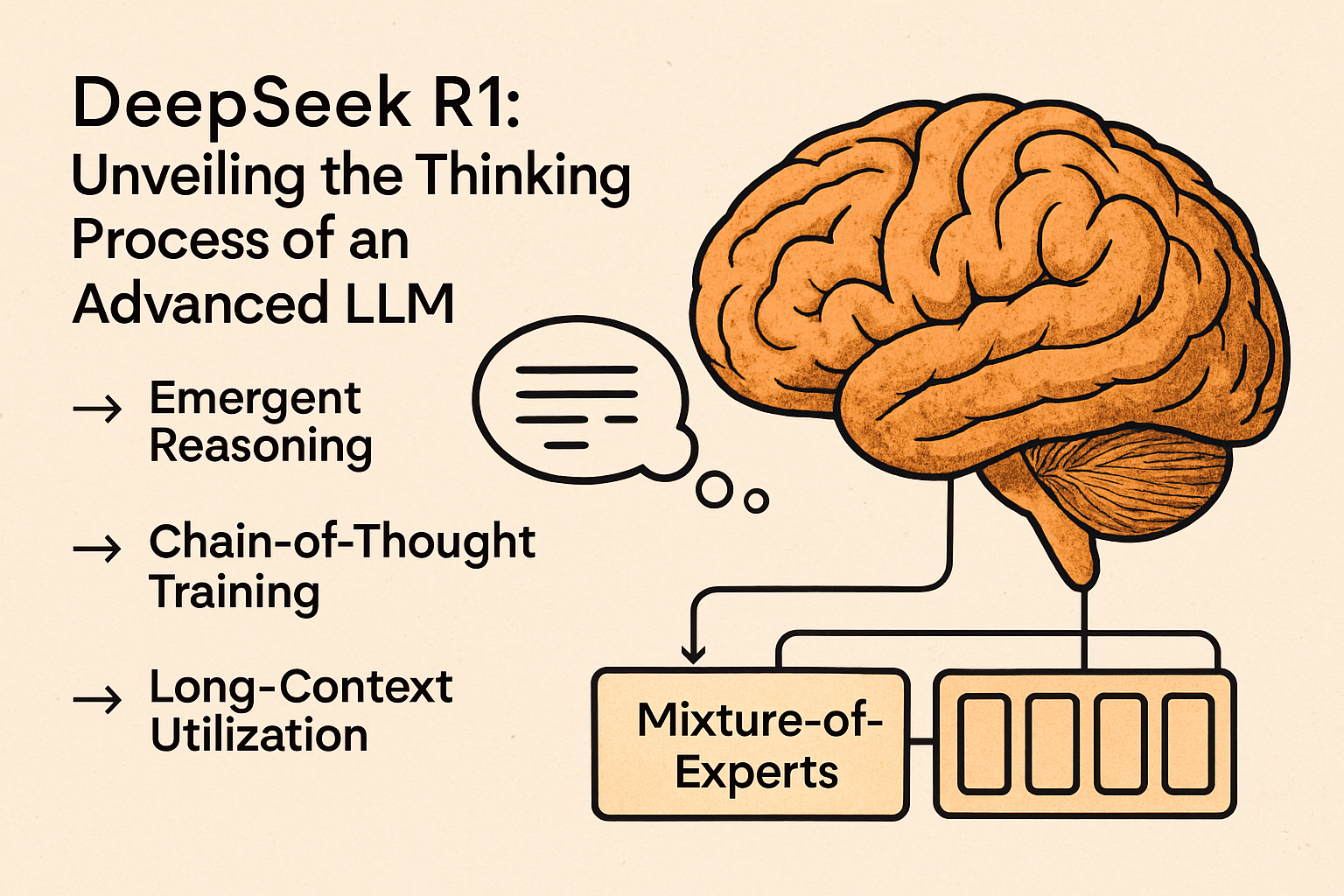

DeepSeek R1: Unveiling the Thinking Process of an Advanced LLM

Posted on March 08, 2024

A deep dive into how DeepSeek R1 'thinks' – exploring emergent reasoning, structured chain-of-thought training, lossless long-context handling, and a Mixture-of-Experts architecture that together form the brain behind this advanced LLM.

Peering into the Mind of DeepSeek R1

Discover how DeepSeek R1 exhibits emergent, step-by-step reasoning, using a structured chain-of-thought training regimen, cutting-edge long-context processing, and a specialized Mixture-of-Experts approach.

DeepSeek R1 isn’t just another black-box LLM—it’s designed to “think” by laying out its reasoning in clear, interpretable steps. This blog post explores how its training and inference methods empower it to solve complex tasks while showing you every step along the way.

Emergent Reasoning: When Thinking Becomes Natural

DeepSeek R1's ability to reason isn't pre-programmed—it emerges from its training. Instead of following rigid, hardcoded instructions, the model learns to break down problems and correct itself through reinforcement learning. Imagine a student who, over time, learns that showing their work leads to better grades. That's exactly what DeepSeek R1 does.

- Reinforcement Learning Rewards: The model is rewarded not only for arriving at the correct answer but also for generating detailed intermediate steps.

- No Explicit Self-Reflection Loop: There is no separate "self-check" module; self-correction emerges naturally as the model's confidence in its tokens guides the generation process.

Structured Chain-of-Thought Training: The Power of <think> Tags

To encourage clarity in its reasoning, DeepSeek R1 was trained using structured chain-of-thought data. Special markers, such as <think> and <answer>, are embedded in the training data to delineate the reasoning phase from the final output.

This training strategy teaches the model to output its reasoning in a clear, step-by-step manner—much like a detailed blog post or a whiteboard explanation.

Example Training Format:

<think>

Step 1: Understand the problem.

Step 2: Evaluate possible approaches.

Step 3: Decide on the most efficient solution.

</think>

<answer>

[Final answer/code]

</answer>

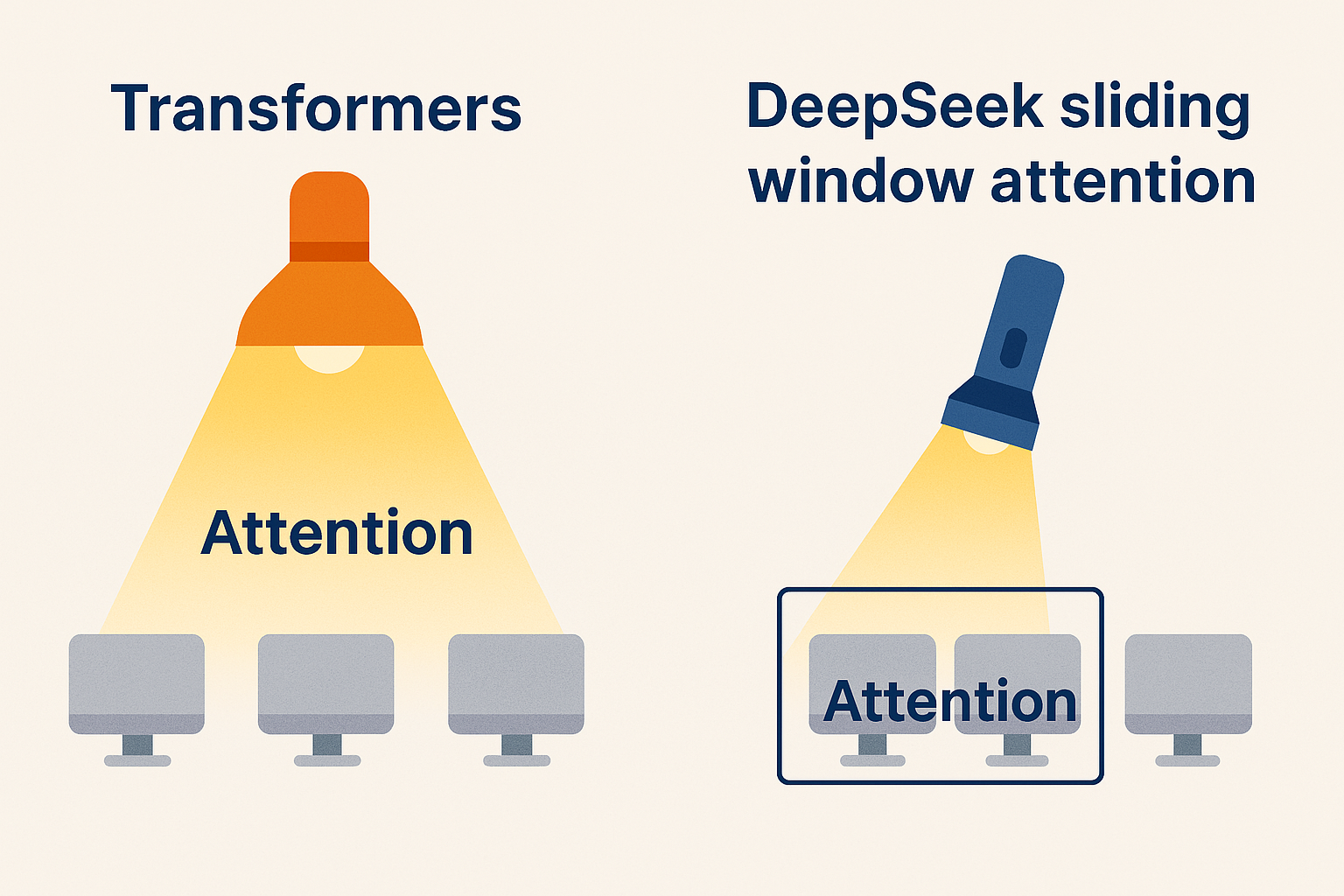

Long-Context Utilization: Handling 128K Tokens

A standout feature of DeepSeek R1 is its ability to process extremely long contexts—up to 128,000 tokens. This is achieved by:

- Sliding Window Attention: Instead of attending to every token at once, the model uses a sliding window to focus on the most relevant information.

- Memory Compression & Token Prioritization: The older, less critical parts of the input are compressed without losing important details, ensuring that the model retains full context even in lengthy documents.

This means that DeepSeek R1 can keep track of details across long texts, making it ideal for applications that require understanding extensive content.

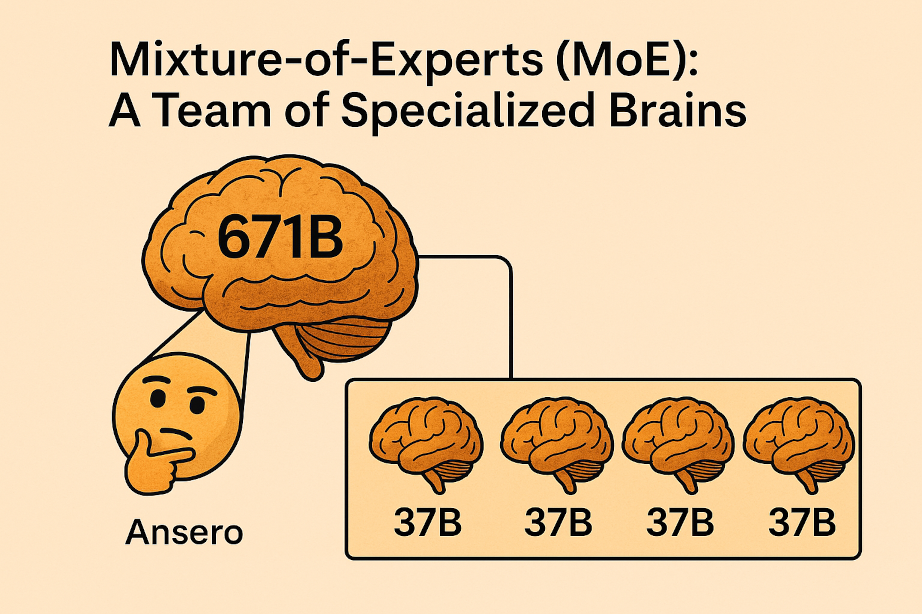

Mixture-of-Experts (MoE): A Team of Specialized Brains

DeepSeek R1 leverages a Mixture-of-Experts (MoE) architecture, allowing it to route different tasks to specialized "expert" subnetworks.

-

How It Works:

While the overall model is massive (671B parameters), only a fraction (approximately 37B) is activated for any given token. This is like consulting a panel of specialists—each expert is skilled in areas such as coding, logic, or language—ensuring that the most relevant knowledge is applied to the task. -

Why It Matters:

This efficient, dynamic routing means DeepSeek R1 not only performs better but also handles complex reasoning tasks with greater accuracy and speed.

A Coding Example: Solving the Two-Sum Problem

To illustrate DeepSeek R1’s thinking process, consider the classic Two-Sum Problem.

Problem Statement

“Given an array of integers and a target value, find the indices of two numbers in the array that add up to the target.”

DeepSeek R1’s Step-by-Step Reasoning

When queried with this problem, DeepSeek R1 might generate an output like:

Reasoning and Solution:

<think>

1. The problem requires finding two indices such that nums[i] + nums[j] equals the target.

2. A brute-force approach (checking every pair) is O(n²), which is inefficient.

3. An optimal solution is to use a hash map for O(n) time by storing each number's index.

4. For each number, check if the target minus that number exists in the hash map.

</think>

<answer>

def two_sum(nums, target):

seen = {}

for i, value in enumerate(nums):

comp = target - value

if comp in seen:

return [seen[comp], i]

seen[value] = i

</answer>

Breaking Down the Example

Chain-of-Thought Process:

The model starts by restating the problem, weighing different approaches, and then outlining an efficient plan.

Final Code Solution:

It produces a Python function that implements the hash map strategy exactly as reasoned in the thought process.

This example demonstrates how DeepSeek R1 uses structured chain-of-thought training to produce transparent, step-by-step reasoning before delivering a final answer.

This example demonstrates how DeepSeek R1 uses structured chain-of-thought training to produce transparent, step-by-step reasoning before delivering a final answer.

Conclusion

DeepSeek R1 is not just another language model—it’s a breakthrough in how AI can reason, self-correct, and process massive amounts of information. By training with structured chain-of-thought data and leveraging a Mixture-of-Experts architecture, it produces transparent, step-by-step solutions even for complex coding tasks. Its ability to manage up to 128K tokens opens up new possibilities for applications that require deep understanding of extended content.

Whether you’re a developer, researcher, or simply an AI enthusiast, DeepSeek R1’s approach to emergent reasoning offers a glimpse into the future of intelligent systems—where machines not only deliver answers but also show you exactly how they arrived at them.

Feel free to share your thoughts and join the conversation as we explore what it means for an AI to truly “think” like a human.